Publications

Publications by category in reverse chronological order. Generated by jekyll-scholar.

2024

- AI Review

Link prediction for hypothesis generation: an active curriculum learning infused temporal graph-based approach. Uchenna Akujuobi*, Priyadarshini Kumari, Jihun Choi, and 4 more authors. In Artificial Intelligence Review, 2024.

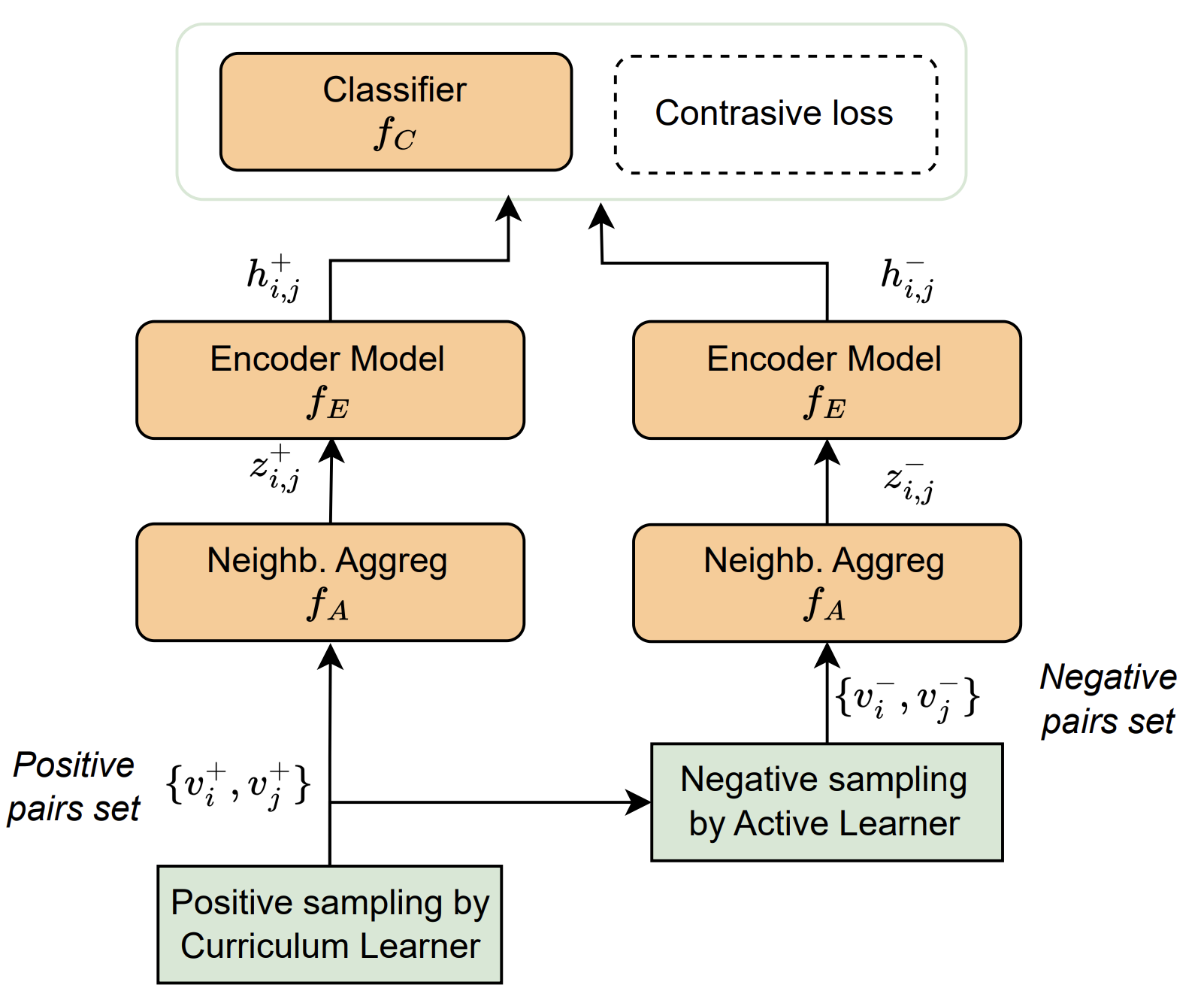

Over the last few years, Literature-based Discovery (LBD) has regained popularity as a means to enhance the scientific research process. The resurgent interest has spurred the development of supervised and semi-supervised machine learning models aimed at making previously implicit connections between scientific concepts/entities explicit based on often extensive repositories of published literature. Understanding the temporally evolving interactions between these entities can provide valuable information for predicting the future development of entity relationships. However, existing methods often underutilize the latent information embedded in the temporal aspects of interaction data. In this context, motivated by applications in the food domain, where we aim to connect nutritional information with health-related benefits, we address the hypothesis-generation problem using a temporal graph-based approach. Given that hypothesis generation involves predicting future (i.e., still to be discovered) entity connections, the ability to capture the dynamic evolution of connections over time is pivotal for a robust model. To address this, we introduce THiGER, a novel batch contrastive temporal node-pair embedding method. THiGER excels in providing a more expressive node-pair encoding by effectively harnessing node-pair relationships. Furthermore, we present THiGER-A, an incremental training approach that incorporates an active curriculum learning strategy to mitigate label bias arising from unobserved connections. By progressively training on increasingly challenging and high-utility samples, our approach significantly enhances the performance of the embedding model.
Empirical validation of our proposed method demonstrates its effectiveness on established temporal-graph benchmark datasets, as well as on real-world datasets within the food domain.
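THiGER is described as a batch contrastive node-pair embedding method. As a rough illustration of what a batch contrastive objective computes, the sketch below implements a generic InfoNCE-style loss: row `i` of `anchors` is paired with row `i` of `positives`, and every other row in the batch serves as a negative. The two-view pairing, the temperature `tau`, and the function name `info_nce` are assumptions for illustration, not the THiGER formulation.

```python
import math

def info_nce(anchors, positives, tau=0.1):
    """Mean InfoNCE loss over a batch (generic sketch, not THiGER itself).
    anchors[i] and positives[i] form the positive pair; all other
    positives[j] in the batch act as negatives for anchors[i]."""
    def cos(u, v):
        dot = sum(a * b for a, b in zip(u, v))
        nu = math.sqrt(sum(a * a for a in u))
        nv = math.sqrt(sum(b * b for b in v))
        return dot / (nu * nv)

    total = 0.0
    for i, a in enumerate(anchors):
        logits = [cos(a, p) / tau for p in positives]
        # log-sum-exp with max subtraction for numerical stability
        m = max(logits)
        lse = m + math.log(sum(math.exp(l - m) for l in logits))
        total += lse - logits[i]  # cross-entropy with the matching row as target
    return total / len(anchors)
```

A lower loss means each node-pair embedding sits closer to its own positive than to the other pairs in the batch; in-batch negatives avoid sampling negatives separately.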

- BMVC

CosFairNet: A Parameter-Space based Approach for Bias Free Learning. Rajeev Ranjan Dwivedi, Priyadarshini Kumari, and Vinod Kumar Kurmi. In British Machine Vision Conference, 2024.

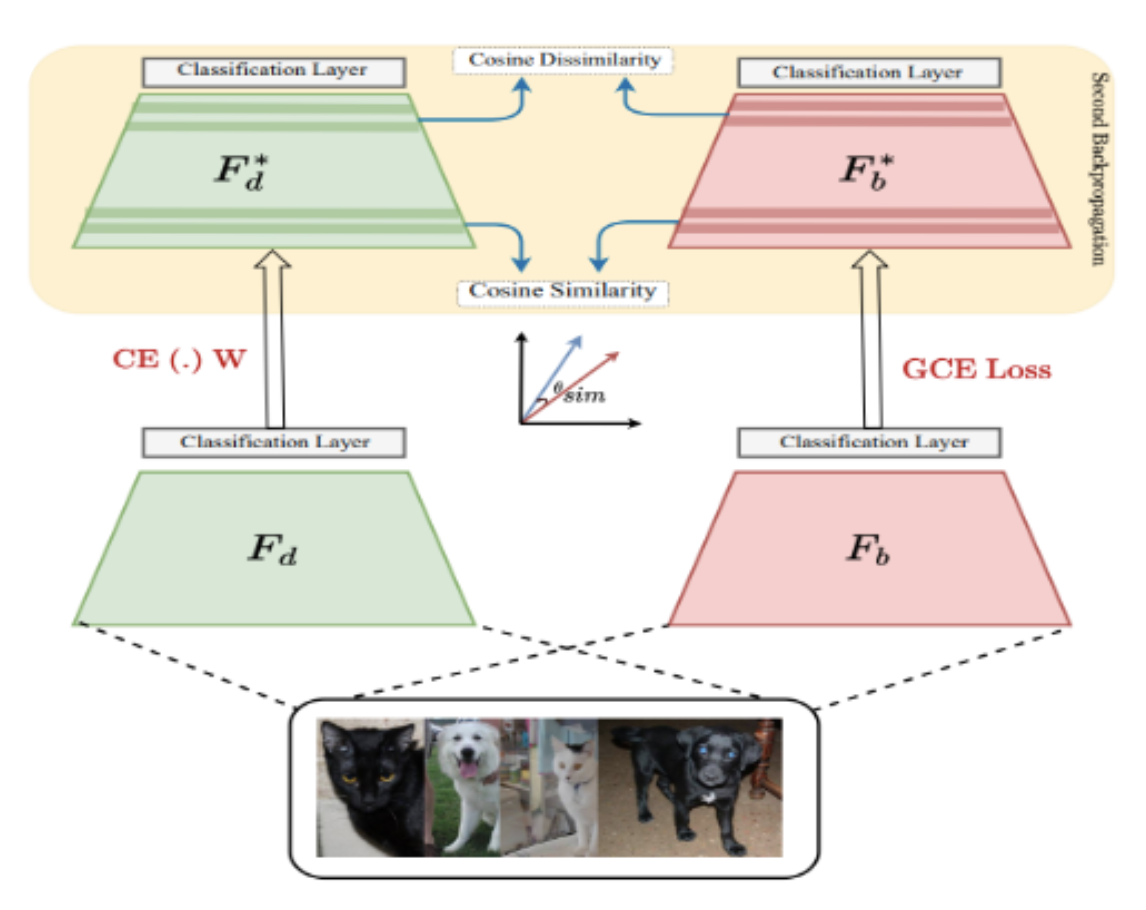

Deep neural networks trained on biased data often inadvertently learn unintended inference rules, particularly when labels are strongly correlated with biased features. Existing bias mitigation methods typically involve either a) predefining bias types and enforcing them as prior knowledge or b) reweighting training samples to emphasize bias-conflicting samples over bias-aligned samples. However, both strategies address bias indirectly in the feature or sample space, with no control over learned weights, making it difficult to control the bias propagation across different layers. Based on this observation, we introduce a novel approach to address bias directly in the model’s parameter space, preventing its propagation across layers. Our method involves training two models: a bias model for biased features and a debias model for unbiased details, guided by the bias model. We enforce dissimilarity in the debias model’s later layers and similarity in its initial layers with the bias model, ensuring it learns unbiased low-level features without adopting biased high-level abstractions. By incorporating this explicit constraint during training, our approach shows enhanced classification accuracy and debiasing effectiveness across various synthetic and real-world datasets of different sizes. Moreover, the proposed method demonstrates robustness across different bias types and percentages of biased samples in the training data.
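The abstract describes enforcing similarity between the bias and debias models in the initial layers and dissimilarity in the later layers. A minimal sketch of such a parameter-space regularizer, assuming cosine similarity between flattened per-layer weights (suggested by the name, not confirmed by the abstract), a hypothetical split index `split`, and a hinge on positive similarity for the later layers:

```python
import math

def cosine(u, v):
    # assumes non-zero weight vectors
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def parameter_space_penalty(bias_layers, debias_layers, split):
    """Hypothetical penalty added to the debias model's training loss.
    bias_layers / debias_layers: flattened weight vectors, one per layer.
    Layers [0, split) are pulled toward the bias model (1 - cos);
    layers [split, L) are pushed away from it (max(0, cos))."""
    penalty = 0.0
    for idx, (wb, wd) in enumerate(zip(bias_layers, debias_layers)):
        c = cosine(wb, wd)
        if idx < split:
            penalty += 1.0 - c        # encourage similar low-level weights
        else:
            penalty += max(0.0, c)    # discourage aligned high-level weights
    return penalty
```

Working on weights rather than features or sample weights is the point of the parameter-space framing: the constraint acts on each layer directly instead of on what the layers output.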

2023

- NeurIPS XAIA

FRUNI and FTREE synthetic knowledge graphs for evaluating explainability. Pablo Sanchez Martin, Tarek Besold, and Priyadarshini Kumari. In NeurIPS 2023 Workshop XAIA, 2023.

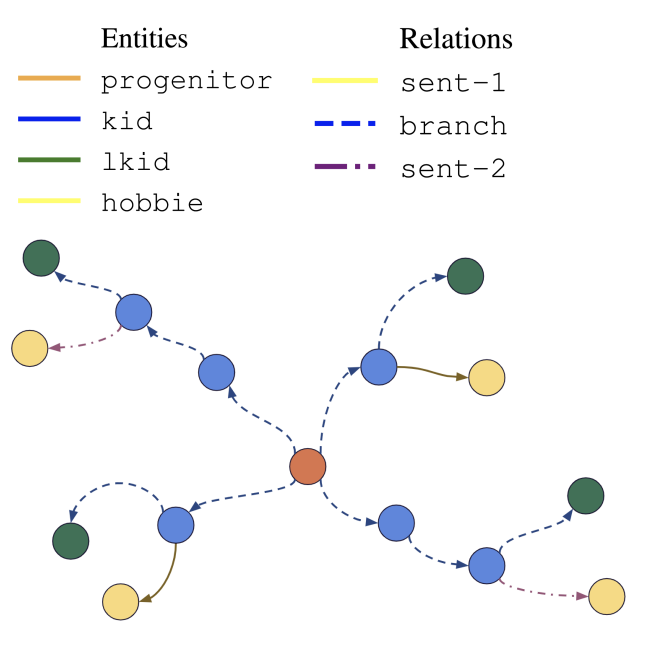

Research on knowledge graph completion (KGC), i.e., link prediction within incomplete KGs, is witnessing significant growth in popularity. Recently, KG embedding (KGE) models for KGC, primarily based on complex architectures (e.g., transformers), have achieved remarkable performance. Still, extracting the *minimal* and relevant information employed by KGE models to make predictions, while constituting a major part of *explaining* the predictions, remains a challenge. While there is a growing literature on explainers for trained KGE models, systematically exposing and quantifying their failure cases poses even greater challenges. In this work, we introduce two synthetic datasets, FRUNI and FTREE, designed to demonstrate the (in)ability of explainer methods to spot link predictions that rely on indirectly connected links. Notably, we empower practitioners to control various aspects of the datasets, such as noise levels and dataset size, enabling them to assess the performance of explainability methods across diverse scenarios. Through our experiments, we assess the performance of four recent explainers in providing accurate explanations for predictions on the proposed datasets. We believe that these datasets are valuable resources for further validating explainability methods within the knowledge graph community.

- Multimodal SIGKDD

Optimizing Learning Across Multimodal Transfer Features for Modeling Olfactory Perception. Daniel Shin, Gao Pei, Priyadarshini Kumari, and 1 more author. In International Workshop on Multimodal Learning at SIGKDD, 2023.

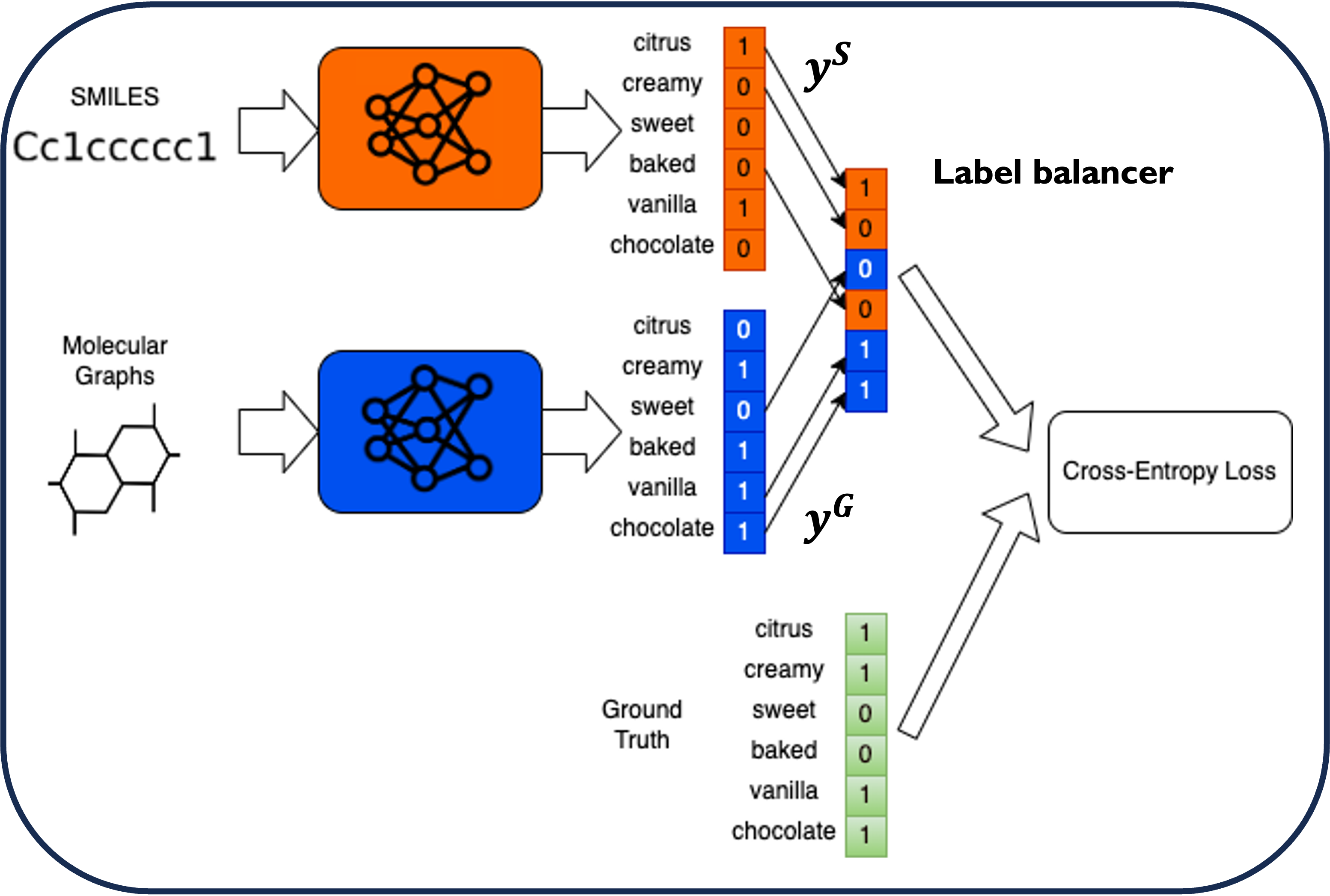

For humans and other animals, the sense of smell provides crucial information in many situations of everyday life. Still, the study of olfactory perception has received only limited attention outside of the biological sciences. From an AI perspective, the complexity of the interactions between olfactory receptors and volatile molecules and the scarcity of comprehensive olfactory datasets present unique challenges in this sensory domain. Previous works have explored the relationship between molecular structure and odor descriptors using fully supervised training approaches. However, these methods are data-intensive and generalize poorly due to labeled data scarcity, particularly for rare-class samples. Our study partially tackles the challenges of data scarcity and label skewness through multimodal transfer learning. We investigate the potential of large molecular foundation models trained on extensive unlabeled molecular data to effectively model olfactory perception. Additionally, we explore the integration of different molecular representations, including molecular graphs and text-based SMILES encodings, to achieve data efficiency and generalization of the learned model, particularly on sparsely represented classes. By leveraging complementary representations, we aim to learn robust perceptual features of odorants. However, we observe that traditional methods of combining modalities do not yield substantial gains in high-dimensional skewed label spaces. To address this challenge, we introduce a novel label-balancer technique specifically designed for high-dimensional multi-label and multi-modal training. The label-balancer technique distributes learning objectives across modalities to optimize collaboratively for distinct subsets of labels.
Our results suggest that multi-modal transfer features learned using the label-balancer technique are more effective and robust, surpassing the capabilities of traditional uni- or multi-modal approaches, particularly on rare-class samples.
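The label-balancer is described only as distributing learning objectives across modalities for distinct subsets of labels. One way such a split could look, sketched under assumptions: each label is assigned to the modality with the best per-label validation score (a hypothetical criterion), and each modality is then trained with a binary cross-entropy masked to its own labels. The names `assign_labels` and `masked_bce` are hypothetical.

```python
import math

def assign_labels(per_label_scores):
    """per_label_scores: dict modality -> list of per-label validation scores.
    Returns dict modality -> set of label indices it is responsible for;
    each label goes to the modality that scores best on it."""
    modalities = list(per_label_scores)
    n_labels = len(per_label_scores[modalities[0]])
    assignment = {m: set() for m in modalities}
    for j in range(n_labels):
        best = max(modalities, key=lambda m: per_label_scores[m][j])
        assignment[best].add(j)
    return assignment

def masked_bce(probs, targets, labels, eps=1e-7):
    """Binary cross-entropy averaged only over the label indices in `labels`."""
    if not labels:
        return 0.0
    total = 0.0
    for j in labels:
        p = min(max(probs[j], eps), 1 - eps)  # clip to avoid log(0)
        total -= targets[j] * math.log(p) + (1 - targets[j]) * math.log(1 - p)
    return total / len(labels)
```

For example, with per-label scores `{"graph": [0.9, 0.2, 0.5], "smiles": [0.1, 0.8, 0.6]}`, the graph branch would own label 0 and the SMILES branch labels 1 and 2.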

- PLOS ONE

Perceptual metrics for odorants: learning from non-expert similarity feedback using machine learning. Priyadarshini Kumari, Tarek Besold, and Michael Spranger. In PLOS ONE, 2023.

Defining perceptual similarity metrics for odorant comparisons is crucial to understanding the mechanism of olfactory perception. Current methods in olfaction rely on molecular physicochemical features or discrete verbal descriptors (floral, burnt, etc.) to approximate perceptual (dis)similarity between odorants. However, structural or verbal descriptors alone are limited in modeling complex nuances of odor perception. While structural features inadequately characterize odor perception, language-based discrete descriptors lack the granularity needed to model a continuous perception space. We introduce data-driven approaches to perceptual metric learning (PMeL) based on two key insights: a) by combining physicochemical features with users’ perceptual feedback, we can leverage both structural and perceptual attributes of odors to define dissimilarity, and b) instead of discrete labels, users’ perceptual feedback can be gathered as relative similarity comparisons, such as “Does molecule-A smell more like molecule-B or molecule-C?” These triplet comparisons are easier even for non-expert users and offer a more effective representation of the continuous perception space. Experimental results on several defined tasks show the effectiveness of our approach in evaluating perceptual dissimilarity between odorants. Finally, we investigate how closely our model, trained on non-expert feedback, aligns with experts’ similarity judgments. Our effort aims to reduce reliance on expert annotations.
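To make the idea of learning a metric from physicochemical features plus triplet feedback concrete, here is a minimal sketch that learns non-negative per-feature weights of a squared Euclidean distance with a hinge loss on triplets “a smells more like b than like c”. The diagonal metric, the margin, the learning rate, and the function names are assumptions; the PMeL models in the paper may be considerably richer.

```python
import random

def weighted_sq_dist(w, x, y):
    return sum(wi * (a - b) ** 2 for wi, a, b in zip(w, x, y))

def learn_diagonal_metric(features, triplets, margin=1.0, lr=0.01, epochs=200, seed=0):
    """features: dict name -> feature vector; triplets: (a, b, c) meaning
    'a smells more like b than like c'. Learns non-negative per-feature
    weights w so that d_w(a, b) + margin <= d_w(a, c), via SGD on the hinge."""
    rng = random.Random(seed)
    dim = len(next(iter(features.values())))
    w = [1.0] * dim
    order = list(triplets)
    for _ in range(epochs):
        rng.shuffle(order)
        for a, b, c in order:
            xa, xb, xc = features[a], features[b], features[c]
            violation = margin + weighted_sq_dist(w, xa, xb) - weighted_sq_dist(w, xa, xc)
            if violation > 0:  # only violated triplets contribute a gradient
                for k in range(dim):
                    grad = (xa[k] - xb[k]) ** 2 - (xa[k] - xc[k]) ** 2
                    w[k] = max(0.0, w[k] - lr * grad)  # projected step keeps w >= 0
    return w
```

Triplets only constrain relative order, so non-experts never have to assign absolute similarity scores; the learned weights indicate which features matter for perceived similarity.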

- PLOS ONE

Comparing molecular representations, e-nose signals, and other featurization, for learning to smell aroma molecules. Tanoy Debnath, Samy Badreddine, Priyadarshini Kumari, and 1 more author. In PLOS ONE, 2023.

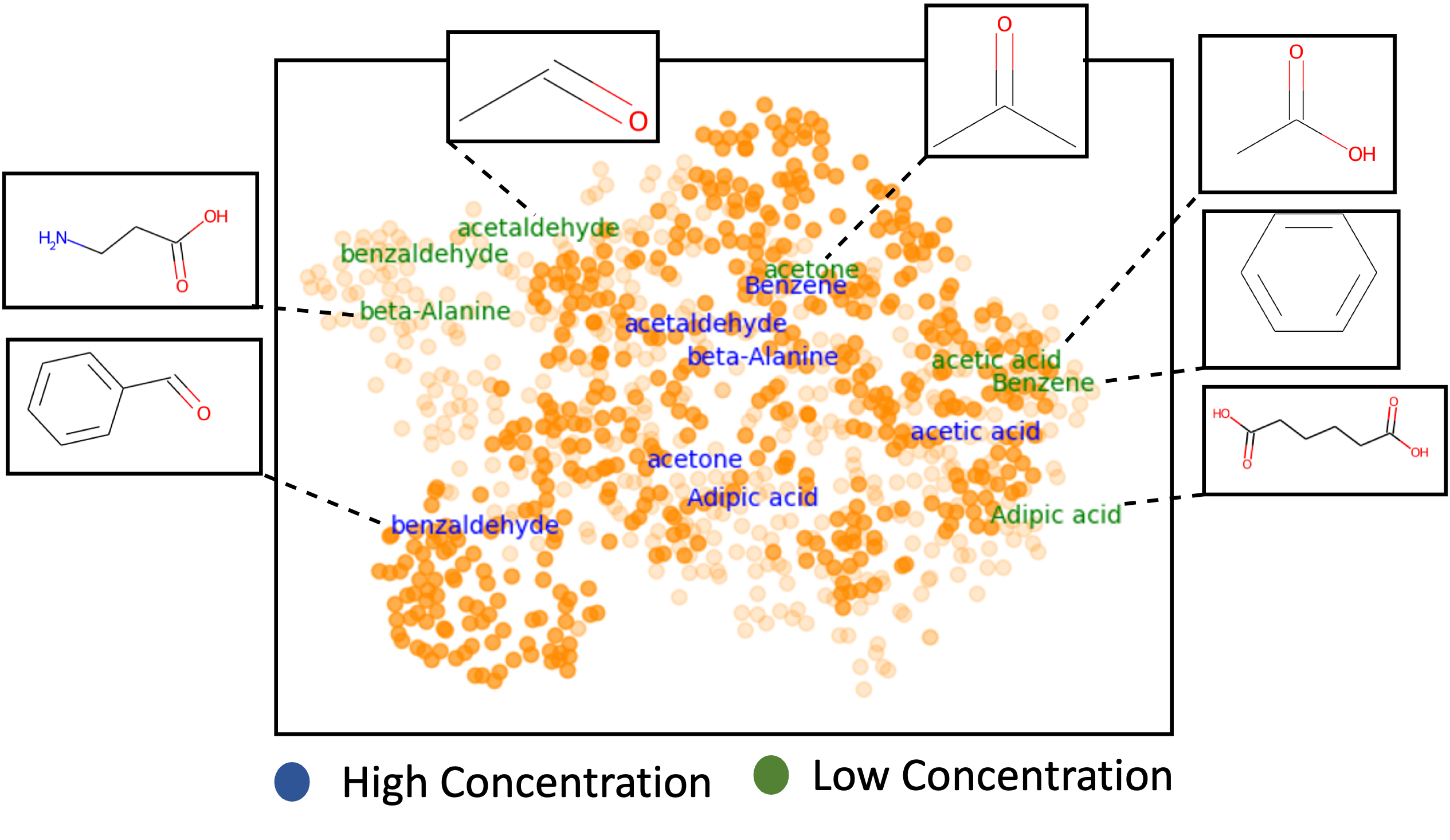

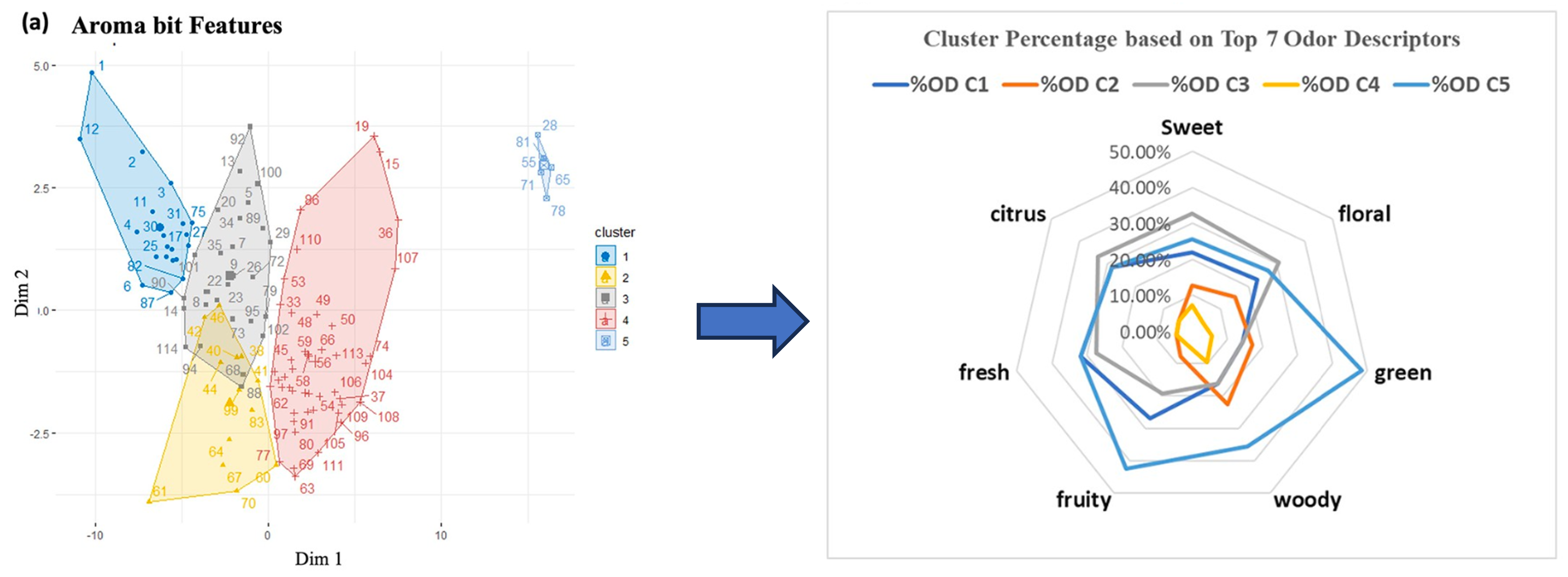

Recent research has attempted to predict our perception of odorants using machine learning models. The featurization of the olfactory stimuli usually represents the odorants using molecular structure parameters, molecular fingerprints, mass spectra, or e-nose signals. However, the impact of the choice of featurization on predictive performance remains poorly reported in direct comparative studies. This paper experiments with different sensory features for several olfactory perception tasks. We investigate the multilabel classification of aroma molecules into odor descriptors. We investigate single-label classification not only over fine-grained odor descriptors ("orange," "waxy," etc.) but also over odor descriptor groups. We created a database of odor vectors for 114 aroma molecules to conduct our experiments using a QCM (Quartz Crystal Microbalance) type smell sensor module (Aroma Coder®V2 Set). We compare these smell features with different baseline features to evaluate the cluster composition, considering the frequencies of the top odor descriptors carried by the aroma molecules. Experimental results suggest statistically significantly better performance of the QCM-type smell sensor module than other baseline features under the F1 evaluation metric.
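The comparison above is reported with the F1 metric. For multilabel odor-descriptor prediction, a minimal sketch of per-label and macro-averaged F1 is shown below; whether the paper uses macro, micro, or another averaging is not stated here, so macro averaging and the zero-F1 convention for labels with no positives are assumptions.

```python
def f1_scores(y_true, y_pred):
    """y_true, y_pred: lists of equal-length 0/1 label vectors (one per molecule).
    Returns (per_label_f1, macro_f1). A label with no positives in either
    truth or prediction gets F1 = 0 here by convention (an assumption)."""
    n_labels = len(y_true[0])
    per_label = []
    for j in range(n_labels):
        tp = sum(1 for t, p in zip(y_true, y_pred) if t[j] == 1 and p[j] == 1)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t[j] == 0 and p[j] == 1)
        fn = sum(1 for t, p in zip(y_true, y_pred) if t[j] == 1 and p[j] == 0)
        denom = 2 * tp + fp + fn  # F1 = 2TP / (2TP + FP + FN)
        per_label.append(2 * tp / denom if denom else 0.0)
    return per_label, sum(per_label) / n_labels
```

Macro averaging weights every descriptor equally, so rare descriptors such as niche odor notes count as much as frequent ones.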

2022

- IEEE Haptics Symposium

Enhancing Haptic Distinguishability of Surface Materials With Boosting Technique. Priyadarshini Kumari and Subhasis Chaudhuri. In IEEE Haptics Symposium, 2022.

Discriminative features are crucial for several learning applications, such as object detection and classification. Neural networks are extensively used for extracting discriminative features of images and speech signals. However, the lack of large datasets in the haptics domain often limits the applicability of such techniques. This paper presents a general framework for the analysis of the discriminative properties of haptic signals. We demonstrate the effectiveness of spectral features and a boosted embedding technique in enhancing the distinguishability of haptic signals. Experiments indicate our framework needs less training data, generalizes well for different predictors, and outperforms the related state-of-the-art.
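The abstract mentions spectral features of haptic signals. As an illustration of the simplest such feature, the sketch below computes the one-sided DFT magnitude spectrum of a short signal window with a naive transform. The windowing and exact spectral representation used in the paper are not stated, and the boosted embedding step is not shown; `magnitude_spectrum` is a hypothetical name.

```python
import cmath
import math

def magnitude_spectrum(signal):
    """Magnitude of the one-sided discrete Fourier transform of a real signal,
    normalized by its length (naive O(n^2) DFT, fine for short windows)."""
    n = len(signal)
    spectrum = []
    for k in range(n // 2 + 1):
        s = sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(signal))
        spectrum.append(abs(s) / n)
    return spectrum
```

For a 16-sample cosine completing two cycles, the spectrum peaks at bin 2 with magnitude 0.5. Magnitude spectra discard phase, which makes them insensitive to where in the stroke a texture feature starts.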

2021

- ECML-PKDD

A Unified Batch Selection Policy for Active Metric Learning. Priyadarshini Kumari, Siddhartha Chaudhuri, Vivek Borkar, and 1 more author. In ECML-PKDD, 2021.

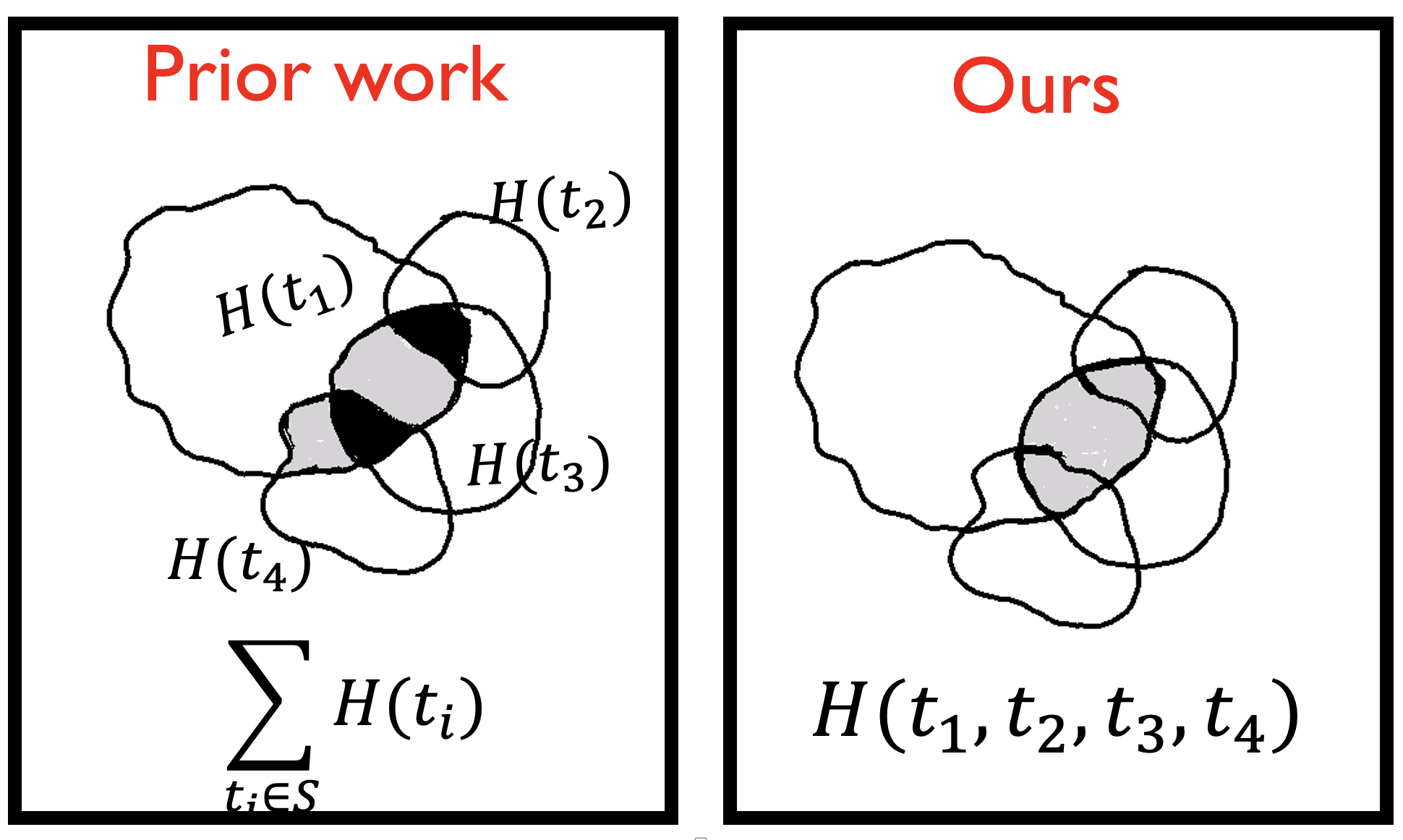

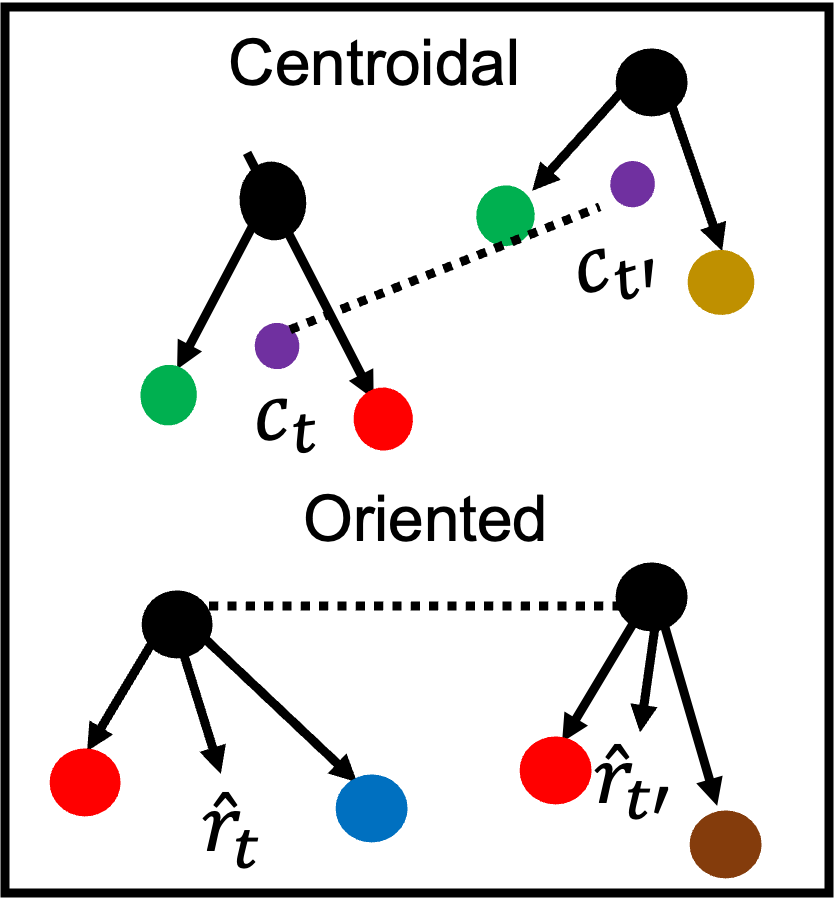

Active metric learning is the problem of incrementally selecting high-utility batches of training data (typically, ordered triplets) to annotate, in order to progressively improve a learned model of a metric over some input domain as rapidly as possible. Standard approaches, which independently assess the informativeness of each triplet in a batch, are susceptible to highly correlated batches with many redundant triplets and hence low overall utility. While a recent work proposes batch-decorrelation strategies for metric learning, it relies on ad hoc heuristics to estimate the correlation between two triplets at a time. We present a novel batch active metric learning method that leverages the Maximum Entropy Principle to learn the least biased estimate of triplet distribution for a given set of prior constraints. To avoid redundancy between triplets, our method collectively selects batches with maximum joint entropy, which simultaneously captures both informativeness and diversity. We take advantage of the submodularity of the joint entropy function to construct a tractable solution using an efficient greedy algorithm based on Gram-Schmidt orthogonalization that is provably (1 − 1/e)-optimal. Our approach is the first batch active metric learning method to define a unified score that balances informativeness and diversity for an entire batch of triplets. Experiments with several real-world datasets demonstrate that our algorithm is robust, generalizes well to different applications and input modalities, and consistently outperforms the state-of-the-art.
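For a Gaussian model, joint entropy grows with the log-determinant of the covariance (Gram) matrix, and adding an item multiplies that determinant by its squared residual norm after orthogonalizing against the items already chosen. The sketch below is a generic greedy log-det selection via Gram-Schmidt under that assumption; it represents each candidate triplet by a hypothetical feature vector and is not the paper's exact estimator.

```python
import math

def greedy_max_volume(vectors, k):
    """Greedily pick up to k indices maximizing log det of their Gram matrix.
    Each step adds the candidate with the largest residual norm after
    Gram-Schmidt projection onto the directions already chosen."""
    residuals = [list(v) for v in vectors]
    chosen = []
    for _ in range(min(k, len(vectors))):
        best, best_norm = None, -1.0
        for i, r in enumerate(residuals):
            if i in chosen:
                continue
            norm = math.sqrt(sum(x * x for x in r))
            if norm > best_norm:
                best, best_norm = i, norm
        if best_norm <= 1e-12:
            break  # remaining candidates are linearly dependent on the chosen set
        chosen.append(best)
        q = [x / best_norm for x in residuals[best]]  # new orthonormal direction
        for i, r in enumerate(residuals):
            if i not in chosen:
                proj = sum(a * b for a, b in zip(r, q))
                residuals[i] = [a - proj * b for a, b in zip(r, q)]
    return chosen
```

With candidates `[1, 0]`, `[0.99, 0.1]`, and `[0, 1]`, the near-duplicate second vector loses almost all of its residual once the first is chosen, so the batch becomes indices 0 and 2: informativeness (norm) and diversity (orthogonality) are scored jointly.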

- IJCAI

Batch decorrelation for active metric learning. Priyadarshini Kumari, Ritesh Goru, Siddhartha Chaudhuri, and 1 more author. In IJCAI, 2021.

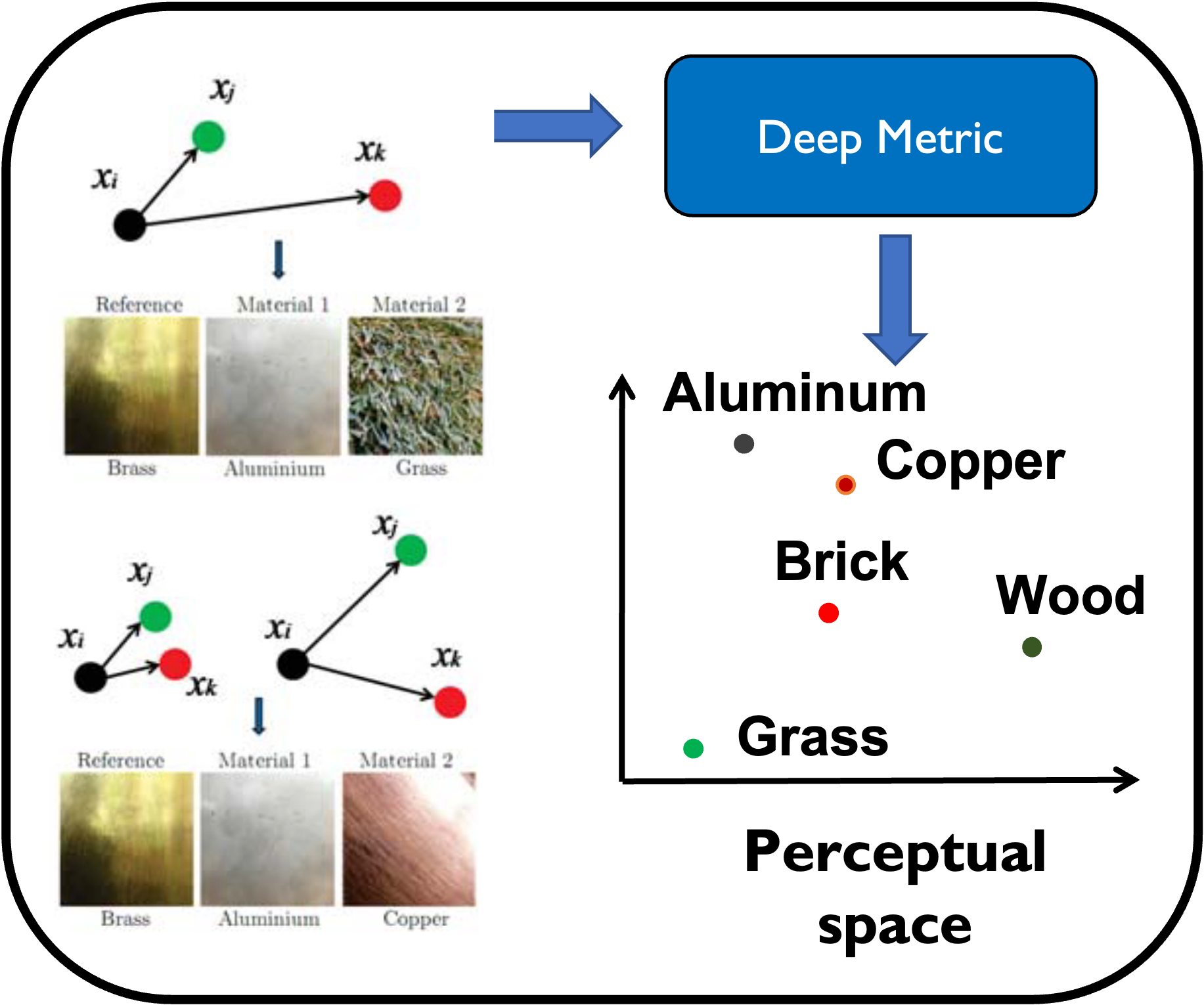

We present an active learning strategy for training parametric models of distance metrics, given triplet-based similarity assessments: object xᵢ is more similar to object xⱼ than to xₖ. In contrast to prior work on class-based learning, where the fundamental goal is classification and any implicit or explicit metric is binary, we focus on perceptual metrics that express the degree of (dis)similarity between objects. We find that standard active learning approaches degrade when annotations are requested for batches of triplets at a time: our studies suggest that correlation among triplets is responsible. In this work, we propose a novel method to decorrelate batches of triplets that jointly balances informativeness and diversity while decoupling the choice of heuristic for each criterion. Experiments indicate our method is general, adaptable, and outperforms the state-of-the-art.
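Decoupling the heuristics for informativeness and diversity can be pictured as a greedy selector that takes both as independent, pluggable functions. A minimal sketch, assuming a hypothetical score of informativeness minus `lam` times the maximum correlation with already-selected triplets; the paper's actual combination rule may differ.

```python
def select_batch(candidates, informativeness, correlation, batch_size, lam=1.0):
    """Greedy batch selection (hypothetical form).
    informativeness(c) -> float and correlation(c1, c2) -> float in [0, 1]
    are independent heuristics supplied by the caller. Each step adds the
    candidate maximizing informativeness(c) - lam * max correlation with the batch."""
    batch = []
    remaining = list(candidates)
    while remaining and len(batch) < batch_size:
        def score(c):
            penalty = max((correlation(c, b) for b in batch), default=0.0)
            return informativeness(c) - lam * penalty
        best = max(remaining, key=score)
        batch.append(best)
        remaining.remove(best)
    return batch
```

Because the two heuristics are plain function arguments, either can be swapped (say, an uncertainty score for informativeness and a feature-space cosine for correlation) without touching the selection loop.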

2019

- IEEE WHC

PerceptNet: Learning perceptual similarity of haptic textures in presence of unorderable triplets. Priyadarshini Kumari, Siddhartha Chaudhuri, and Subhasis Chaudhuri. In IEEE World Haptics Conference (WHC), 2019.

In order to design haptic icons or build a haptic vocabulary, we require a set of easily distinguishable haptic signals to avoid perceptual ambiguity, which in turn requires a way to accurately estimate the perceptual (dis)similarity of such signals. In this work, we present a novel method to learn such a perceptual metric based on data from human studies. Our method is based on a deep neural network that projects signals to an embedding space where the natural Euclidean distance accurately models the degree of dissimilarity between two signals. The network is trained only on non-numerical comparisons of triplets of signals, using a novel triplet loss that considers both types of triplets that are easy to order (inequality constraints), as well as those that are unorderable/ambiguous (equality constraints). Unlike prior MDS-based non-parametric approaches, our method can be trained on a partial set of comparisons and can embed new haptic signals without retraining the model from scratch. Extensive experimental evaluations show that our method is significantly more effective at modeling perceptual dissimilarity than alternatives.
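The triplet loss above handles two kinds of constraints. A minimal sketch of one plausible form, assuming a margin hinge for orderable triplets (inequality) and an absolute distance gap for unorderable ones (equality); the exact PerceptNet loss, the margin value, and the names here are assumptions, and the embeddings would come from the network rather than a fixed dictionary.

```python
import math

def euclid(u, v):
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))

def mixed_triplet_loss(emb, ordered, unorderable, margin=0.5):
    """emb: dict signal -> embedding vector.
    ordered: (a, p, n) with a judged closer to p than to n -> hinge on the inequality.
    unorderable: (a, b, c) where neither is judged closer -> penalize |d(a,b) - d(a,c)|."""
    loss = 0.0
    for a, p, n in ordered:
        loss += max(0.0, margin + euclid(emb[a], emb[p]) - euclid(emb[a], emb[n]))
    for a, b, c in unorderable:
        loss += abs(euclid(emb[a], emb[b]) - euclid(emb[a], emb[c]))
    count = len(ordered) + len(unorderable)
    return loss / count if count else 0.0
```

Treating ambiguous judgments as equality constraints uses data that a plain triplet loss would have to discard.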

2017

- Book

Cultural heritage objects: Bringing them alive through virtual touch. Subhasis Chaudhuri and Priyadarshini Kumari. In Digital Hampi: Preserving Indian Cultural Heritage, 2017.

2016

- EuroHaptics

Haptic Rendering of Thin, Deformable Objects with Spatially Varying Stiffness. Priyadarshini Kumari and Subhasis Chaudhuri. In EuroHaptics, 2016.

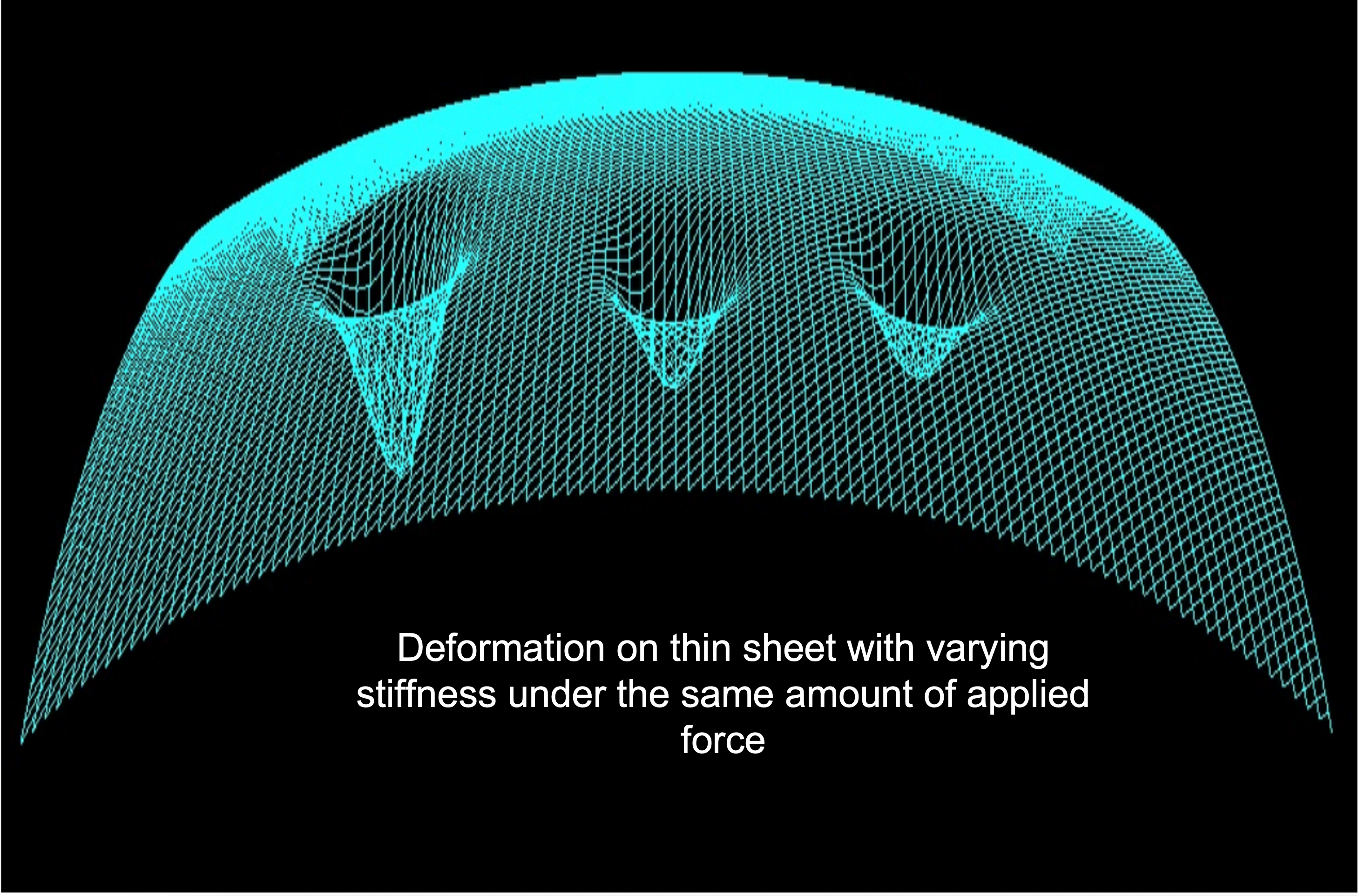

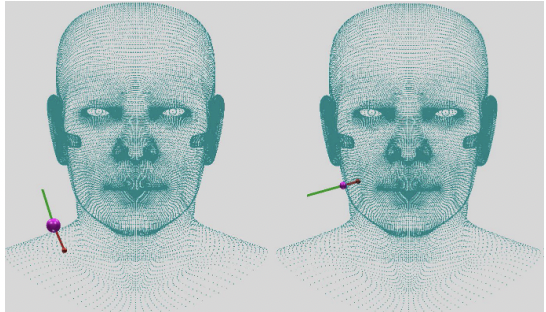

In the real world, we often come across soft objects with spatially varying stiffness, such as the human palm or a wart on the skin. In this paper, we propose a novel approach to render thin, deformable objects with spatially varying stiffness (inhomogeneous material). We use the classical Kirchhoff thin plate theory to compute the deformation. In general, physics-based rendering of an arbitrary 3D surface is complex and time-consuming. Therefore, we approximate the 3D surface locally by a 2D plane using an area-preserving mapping technique, the Gall-Peters mapping. Once the deformation is computed by solving a fourth-order partial differential equation, we project the points back onto the original object for proper haptic rendering. The method was validated through user experiments and was found to be realistic.
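The Gall-Peters mapping is the cylindrical equal-area projection with standard parallels at 45°: x = Rλ cos 45°, y = R sin φ / cos 45°, so that dx dy = R² cos φ dλ dφ, the spherical area element. The sketch below shows the forward and inverse projection for a sphere of radius R; how the paper parametrizes a local surface patch in (λ, φ) is not stated, so the spherical coordinates are an assumption.

```python
import math

# Gall-Peters: cylindrical equal-area projection with standard parallels at 45 degrees.
COS_PHI0 = math.cos(math.radians(45.0))

def gall_peters(lon, lat, radius=1.0):
    """Map (longitude, latitude) in radians on a sphere of the given radius to plane (x, y)."""
    return radius * lon * COS_PHI0, radius * math.sin(lat) / COS_PHI0

def gall_peters_inverse(x, y, radius=1.0):
    """Inverse mapping from plane (x, y) back to (longitude, latitude) in radians."""
    return x / (radius * COS_PHI0), math.asin(y * COS_PHI0 / radius)
```

As a check of the equal-area property, the rectangle covering one hemisphere (λ from −π to π, φ from 0 to π/2) has plane area 2πR cos 45° · R / cos 45° = 2πR², exactly the hemisphere's surface area. Preserving area keeps stiffness values defined per unit area meaningful after flattening.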

2014

- ACCVw

Combined hapto-visual and auditory rendering of cultural heritage objects. Praseedha Krishnan Aniyath, Sreeni Kamalalayam Gopalan, Priyadarshini Kumari, and 1 more author. In ACCVw, 2014.

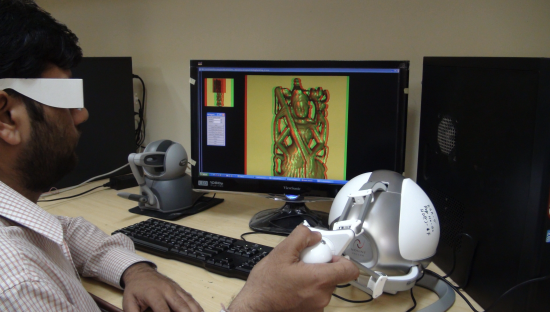

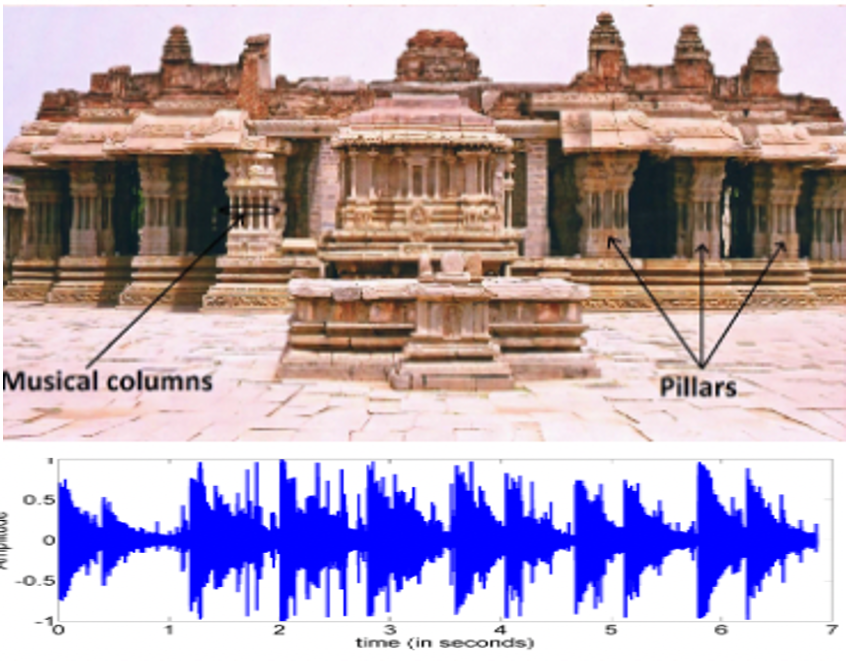

In this work, we develop a multi-modal rendering framework comprising hapto-visual and auditory data. The prime focus is to haptically render point cloud data representing virtual 3-D models of cultural significance and also to handle their affine transformations. Cultural heritage objects can be very large, and one may need to render an object at various levels of detail. Further, surface effects such as texture and friction are incorporated in order to provide a realistic haptic perception to the users. Moreover, the proposed framework includes an appropriate sound synthesis to bring out the acoustic properties of the object. It also includes a graphical user interface with options such as choosing the desired orientation of 3-D objects and adaptively selecting the desired level of spatial resolution at runtime. A fast, point proxy-based haptic rendering technique is proposed, with a proxy update loop running 100 times faster than the required haptic update frequency of 1 kHz. The surface properties are integrated into the system by applying a bilateral filter to the depth data of the virtual 3-D models. Position-dependent sound synthesis is achieved using appropriate audio clips.
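A bilateral filter smooths depth values while preserving sharp edges: each sample becomes a weighted mean of its neighbours, with weights that fall off both with spatial distance and with depth difference. The sketch below is a standard bilateral filter on a 1-D depth profile for brevity; the paper applies it to 2-D depth data, and the parameter values here are assumptions.

```python
import math

def bilateral_filter_1d(depth, radius=2, sigma_s=1.0, sigma_r=0.1):
    """Edge-preserving smoothing of a 1-D depth profile. Weights decay with
    spatial distance (sigma_s) and with depth difference (sigma_r)."""
    n = len(depth)
    out = []
    for i in range(n):
        num = den = 0.0
        for j in range(max(0, i - radius), min(n, i + radius + 1)):
            w = math.exp(-((i - j) ** 2) / (2 * sigma_s ** 2)
                         - ((depth[i] - depth[j]) ** 2) / (2 * sigma_r ** 2))
            num += w * depth[j]
            den += w
        out.append(num / den)  # den >= 1 because the centre sample has weight 1
    return out
```

On a step edge such as `[0, 0, 0, 1, 1, 1]` with `sigma_r=0.1`, samples across the edge get weight about exp(−50), so the step survives almost unchanged while small depth noise within each side would be averaged out.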

2013

- IEEE WHC

Scalable rendering of variable density point cloud data. Priyadarshini Kumari, KG Sreeni, and Subhasis Chaudhuri. In IEEE World Haptics Conference (WHC), 2013.

In this paper, we present a novel proxy-based method for adaptive haptic rendering of variable-density 3D point cloud data at different levels of detail without pre-computing the mesh structure. We also incorporate features like rotation, translation, and friction to provide a more realistic experience to the user. A proxy-based rendering technique is used to avoid the pop-through problem while rendering thin parts of the object. Instead of a point proxy, a spherical proxy of variable radius is used, which prevents the proxy from sinking into sparse data during haptic interaction. The radius of the proxy is adaptively varied depending on the local density of the point data using kernel bandwidth estimation. During the interaction, the proxy moves in small steps tangentially over the point cloud such that the new position always minimizes the distance between the proxy and the haptic interaction point (HIP). The raw point cloud data, re-sampled in a regular 3D lattice of voxels, are loaded into the haptic space after proper smoothing to avoid aliasing effects. The rendering technique was tested with several subjects, and it was observed that this functionality enhances the user’s experience by allowing the user to interact with an object at multiple resolutions.
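The proxy radius is said to follow the local point density via kernel bandwidth estimation. A common adaptive bandwidth is the distance to the k-th nearest neighbour, which grows where points are sparse. The sketch below uses that choice, scaled and clamped; the k-NN rule, `k`, `scale`, and the clamp limits are assumptions, not the paper's estimator.

```python
import math

def adaptive_proxy_radius(points, query, k=3, scale=1.0, r_min=0.001, r_max=0.05):
    """Proxy radius from local point density: the distance from `query` to its
    k-th nearest neighbour in `points` (a k-NN bandwidth estimate), scaled and
    clamped to [r_min, r_max]. Sparse regions give larger radii."""
    dists = sorted(math.dist(query, p) for p in points)
    h = dists[min(k, len(dists)) - 1]
    return min(max(scale * h, r_min), r_max)
```

A larger radius in sparse regions lets the spherical proxy rest on several points at once instead of slipping through the gaps between them, which is the sinking problem the abstract describes.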

2012

- EuroHaptics

Haptic rendering of cultural heritage objects at different scales. KG Sreeni, Priyadarshini Kumari, AK Praseedha, and 1 more author. In EuroHaptics, 2012.

In this work, we address the issue of virtual representation of cultural heritage objects for haptic interaction. Our main focus is to provide haptic access to artistic objects of any physical scale for differently abled people. This is a low-cost system and, in conjunction with a stereoscopic visual display, gives a better immersive experience even to sighted persons. To achieve this, we propose a simple multilevel, proxy-based hapto-visual rendering technique for point cloud data, which includes the much-desired scalability feature that enables users to change the scale of the objects adaptively during the haptic interaction. For the proposed haptic rendering technique, the proxy update loop runs 100 times faster than the required haptic update frequency of 1 kHz. We observe that this functionality adds considerably to the realism of the experience.
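A multilevel representation of a point cloud can be built by resampling it onto voxel grids of increasing size, so that switching levels changes the scale of detail the proxy interacts with. The sketch below replaces the points in each voxel by their centroid and doubles the voxel size per level; the centroid rule, the doubling schedule, and the function names are assumptions rather than the method described in the paper.

```python
from collections import defaultdict

def voxel_downsample(points, voxel_size):
    """Replace all points falling in the same cubic voxel by their centroid."""
    cells = defaultdict(list)
    for p in points:
        key = tuple(int(c // voxel_size) for c in p)  # floor division handles negatives
        cells[key].append(p)
    return [tuple(sum(c) / len(group) for c in zip(*group)) for group in cells.values()]

def build_levels(points, base_voxel, n_levels):
    """Level 0 is the finest; each further level doubles the voxel size."""
    return [voxel_downsample(points, base_voxel * 2 ** i) for i in range(n_levels)]
```

Precomputing every level once makes a runtime scale change a lookup rather than a recomputation, which matters when the haptic loop must keep running at 1 kHz.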